Here I describe what life in Malaysia looks like under COVID-19. This installment covers the tightening of restrictions on some residents (2021/10/6).

Introduction

New case numbers in Malaysia are currently trending down from their earlier peak.

Restrictions are therefore being lifted in stages, and yet...

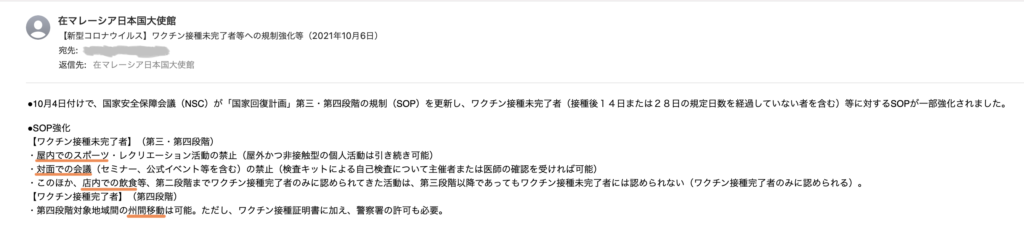

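An email from the Embassy of Japan in Malaysia dated 2021/10/6 carried the words "tightened restrictions"!!

On a closer look, it said the tightening applies only to people who have not completed vaccination.

Until now, the notices had been worded as easing restrictions only for people who had completed vaccination.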

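Comparing the two

Here is a quick comparison of the current situation for people who have completed vaccination and those who have not: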

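| | Vaccination completed | Vaccination not completed |
| --- | --- | --- |
| Dine-in at restaurants | ○ | ✗ |
| Travel between KL, Putrajaya, and Selangor | ○ | ✗ |
| Tourism and leisure (cinemas, etc.) | ○ | ✗ |
| Clothing stores, barbers, etc. | ○ | ✗ |
| Visiting graves | ○ | ○ |
| Religious activities | ○ | △ |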

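Even in Malaysia, a multiethnic country that recognizes freedom of religion, restrictions are being tightened for people who have not completed vaccination (>_<)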

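Around the world

What about outside Malaysia?

What shocked me was a Washington Post article dated 2021/9/29 reporting that YouTube would remove all anti-vaccine posts.

Under pressure from the government and public opinion, YouTube had little choice but to remove them,

but when I think about it carefully, it strikes me as quite a remarkable thing.

When social media turned ours into an information society, I felt we had entered "a world where you pick the correct information out of an overabundance of it," and I thought that sorting and selecting information would be what matters from then on.

But if the major social media companies are swayed by pressure from governments and others, I feel it has become "a world where you search information for its hidden meaning."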

Reference: original text

YouTube is banning prominent anti-vaccine activists and blocking all anti-vaccine content

https://www.washingtonpost.com/technology/2021/09/29/youtube-ban-joseph-mercola/

The Google-owned video site previously only banned misinformation about coronavirus vaccines. Facebook made the same change months ago.

By Gerrit De Vynck

September 29, 2021 at 1:52 p.m. EDT

SAN FRANCISCO — YouTube is taking down several video channels associated with high-profile anti-vaccine activists including Joseph Mercola and Robert F. Kennedy Jr., who experts say are partially responsible for helping seed the skepticism that’s contributed to slowing vaccination rates across the country.

As part of a new set of policies aimed at cutting down on anti-vaccine content on the Google-owned site, YouTube will ban any videos that claim that commonly used vaccines approved by health authorities are ineffective or dangerous. The company previously blocked videos that made those claims about coronavirus vaccines, but not ones for other vaccines like those for measles or chickenpox.

Misinformation researchers have for years said the popularity of anti-vaccine content on YouTube was contributing to growing skepticism of lifesaving vaccines in the United States and around the world. Vaccination rates have slowed and about 56 percent of the U.S. population has had two shots, compared with 71 percent in Canada and 67 percent in the United Kingdom. In July, President Biden said social media companies were partially responsible for spreading misinformation about the vaccines, and need to do more to address the issue.

The change marks a shift for the social media giant, which streams more than 1 billion hours’ worth of content every day. Like its peers Facebook and Twitter, the company has long resisted policing content too heavily, arguing maintaining an open platform is critical to free speech. But as the companies increasingly come under fire from regulators, lawmakers and regular users for contributing to social ills — including vaccine skepticism — YouTube is again changing policies that it has held onto for months.

“You create this breeding ground and when you deplatform it doesn’t go away, they just migrate,” said Hany Farid, a computer science professor and misinformation researcher at the University of California at Berkeley. “This is not one that should have been complicated. We had 18 months to think about these issues, we knew the vaccine was coming, why was this not the policy from the very beginning?”

YouTube didn’t act sooner because it was focusing on misinformation specifically about coronavirus vaccines, said Matt Halprin, YouTube’s vice president of global trust and safety. When it noticed that incorrect claims about other vaccines were contributing to fears about the coronavirus vaccines, it expanded the ban.

“Developing robust policies takes time,” Halprin said. “We wanted to launch a policy that is comprehensive, enforceable with consistency and adequately addresses the challenge.”

Mercola, an alternative medicine entrepreneur, and Kennedy, a lawyer and the son of Sen. Robert F. Kennedy who has been a face of the anti-vaccine movement for years, have both said in the past that they are not automatically against all vaccines, but believe information about the risks of vaccines is being suppressed.

Facebook banned misinformation on all vaccines seven months ago, though the pages of both Mercola and Kennedy remain up on the social media site. Their Twitter accounts are active, too.

In an email, Mercola said he was being censored and said, without presenting evidence, that vaccines had killed many people. Kennedy also said he was being censored. “There is no instance in history when censorship and secrecy has advanced either democracy or public health,” he said in an email.

More than a third of the world’s population has been vaccinated and the vaccines have been proved to be overwhelmingly safe.

YouTube, Facebook and Twitter all banned misinformation about the coronavirus early on in the pandemic. But false claims continue to run rampant across all three of the platforms. The social networks are also tightly connected, with YouTube often serving as a library of videos that go viral on Twitter or Facebook.

That dynamic is often overlooked in discussions about coronavirus misinformation, said Lisa Fazio, an associate professor at Vanderbilt college who studies misinformation.

“YouTube is the vector for a lot of this misinformation. If you see misinformation on Facebook or other places, a lot of the time it’s YouTube videos. Our conversation often doesn’t include YouTube when it should,” Fazio said.

The social media companies have hired thousands of moderators and used high-tech image- and text-recognition algorithms to try to police misinformation. YouTube has removed over 133,000 videos for broadcasting coronavirus misinformation, Halprin said.

There are also millions of people with legitimate concerns about the medical system, and social media is a place where they go to ask real questions and express their concerns and fears, something the companies don’t want to squelch.

In the past, the company’s leaders have focused on trying to remove what they call “borderline” videos from its recommendation algorithms, allowing people to find them with specific searches but not necessarily promoting them into new people’s feeds. It’s also worked to push more authoritative health videos, like those made by hospitals and medical schools, to the top of search results for health-care topics.

But those methods haven’t stopped the spread of anti-vaccine and coronavirus misinformation. More than a year after YouTube said it would take down misinformation about the coronavirus vaccines, the accounts of six out of 12 anti-vaccine activists — identified by the nonprofit Center for Countering Digital Hate as being behind much of the anti-vaccine content shared on social media — were easily searchable and still posting videos. Wednesday’s policy change means many of those will now be taken down.

Once communities grow and build bonds on mainstream platforms like Facebook and YouTube, they often survive even if they get kicked off those sites, said Farid. Influential figures that get banned on YouTube can slide to another service like Telegram or Gab which have fewer restrictions on content, and ask their followers to come with them.

“These conspiracy theories don’t just go away when they stop being on YouTube,” Farid said. “You’ve created the community, you’ve created the poison, and then they just move onto some other platform.”

The anti-vaccine movement goes back to well before the pandemic. False scientific claims that childhood vaccines caused autism made in the late 1990s have contributed to rising numbers of people refusing to let their kids get shots that had been commonplace for decades. As social media took over more of the media landscape, anti-vaccine activists spread their messages on Facebook parenting groups and through YouTube videos.

When the pandemic hit, and vaccines became a topic that was suddenly relevant to everyone, not just parents of young children, many went looking for answers online. Influencers like Mercola, Kennedy and alternative health advocate Erin Elizabeth Finn were able to supercharge their followings. Some anti-vaccine influencers, including Mercola, also sell natural health products, giving them a financial incentive to promote skepticism of mainstream medicine.

The anti-vaccine movement now also incorporates groups as diverse as conspiracy theorists who believe former president Donald Trump is still the rightful president, and some wellness influencers who see the vaccines as unnatural substances that will poison human bodies. All of the government-approved coronavirus vaccines have gone through rigorous testing and have been scientifically proved to be highly effective and safe.

The company is also expanding its work to bring more videos from official sources onto the platform, like the National Academy of Medicine and the Cleveland Clinic, said Garth Graham, YouTube’s global head of health care and public health partnerships. The goal is to get videos with scientific information in front of people before they go down the rabbit hole of anti-vaccine content.

“There is information, not from us, but information from other researchers on health misinformation that has shown the earlier you can get information in front of someone before they form opinions, the better,” Graham said.

Getting authoritative information in front of people early can help, but fact-based videos often have trouble competing for people’s attention with ones spouting misinformation, Fazio said. “If you’re going to make up something you can make it as compelling as you want.”

YouTube’s new policy will still allow people to make claims based on their own personal experience, like a mother talking about side effects her child experienced after getting a vaccine, Halprin said. Scientific discussion of vaccines and posting about vaccines’ historical failures or successes will also be allowed, he said.

“We’ll remove claims that vaccines are dangerous or cause a lot of health effects, that vaccines cause autism, cancer, infertility or contain microchips,” Halprin said. “At least hundreds” of moderators at YouTube are working specifically on medical misinformation, he added. The policy will be enforced in all of the dozens of languages that YouTube operates in.

Still, there are dozens of past examples of videos going viral even if they explicitly break the company’s rules. Sometimes YouTube only catches them after they’ve been flagged by reporters or regular viewers, and have already racked up thousands or millions of views.

“Like always, the devil’s in the details. How well will they actually do at pulling down these videos?” Fazio said. “But I do think it’s a step in the right direction.”

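Where minority opinions go

Where did the YouTubers whose videos and accounts were removed go?

Before getting to that: YouTubers are paid by Google, so I expect most of them will stop posting anything that could get their accounts removed by Google, and the available information will become skewed.

Posters whose accounts were removed are rumored to have moved to Niconico Douga (ニコニコ動画), which has not come under such pressure so far.

Incidentally, I compared the search results on the two sites as of 2021/10/6.

* Searched for "コロナワクチン" (COVID vaccine)

This is YouTube

This is Niconico Douga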

Closing

I always try as best I can not to discriminate against people,

and I am quietly praying that vaccine discrimination does not take hold.
